I am a software developer and musician with a passion for discovering new ways of generating, transforming, and experiencing sound.

For the past 8 years, I have worked with small multidisciplinary teams to build interactive experiences for cultural institutions and brands including Denver Botanic Gardens, SFMOMA, The National Archives, Adidas, Occidental College, Coca-Cola, Epson, and SEGD. I am also the creator of Borderlands Granular, an expressive multitouch interface for granular synthesis on the iPad. At 9 years old, Borderlands has a worldwide community of users and has found its way into film scores, live performances, educational workshops, and field recording expeditions. It has been called "a reason to own an iPad" by Peter Kirn of Create Digital Music.

Please have a look at my work, and say hello at carlsonc (at) ccrma.stanford.edu. I would love to hear from you!

ZKM App Art Award - Special Prize for Sound Art, 2015.

Prix Ars Electronica - Award of Distinction in Digital Musics and Sound Art, 2013.

Sónar Festival of Advanced Music and New Media Art, Live Performance, 2013.

Borderlands Granular is a new musical instrument for exploring, touching, and transforming sound with granular synthesis, a technique that involves the superposition of small fragments of sound, or grains, to create complex, evolving timbres and textures.
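The superposition of grains described above can be sketched in a few lines of Python. This is only an illustrative toy, not the Borderlands implementation: the function names (`hann`, `granulate`), the Hann window, the random grain placement, and the sine-wave source are all assumptions chosen to keep the example small.

```python
import math
import random

def hann(n):
    """Hann window of length n, used to fade each grain in and out."""
    if n < 2:
        return [1.0] * n
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def granulate(source, out_len, grain_len, num_grains, seed=0):
    """Sum randomly chosen, windowed grains of `source` into an output buffer.

    Hypothetical helper: grain positions are picked uniformly at random,
    which is only one of many possible scheduling strategies.
    """
    rng = random.Random(seed)
    window = hann(grain_len)
    out = [0.0] * out_len
    for _ in range(num_grains):
        src_start = rng.randrange(0, len(source) - grain_len + 1)
        out_start = rng.randrange(0, out_len - grain_len + 1)
        for i in range(grain_len):
            # Overlapping grains add together (superposition).
            out[out_start + i] += source[src_start + i] * window[i]
    return out

# One second of a 220 Hz sine stands in for a recorded sound.
sample_rate = 8000
source = [math.sin(2 * math.pi * 220 * t / sample_rate) for t in range(sample_rate)]

# 200 grains of 50 ms each, scattered over a two-second output buffer.
texture = granulate(source, out_len=2 * sample_rate, grain_len=400, num_grains=200)
```

Changing the grain length, the number of grains, or where grains are read from in the source changes the resulting texture, which is the kind of control a granular instrument exposes to the performer.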

The software enables flexible, real-time improvisation and is designed to support interaction with sonic material on a fundamental level. The user is envisioned as an organizer of sound, simultaneously assuming the roles of curator, performer, and listener. Gestural interaction and visual feedback are emphasized over knobs and sliders to encourage a sculptural and spatial approach to making music.

Please see the press kit for more information, or have a look at the CDM review of version 2.0.

Commissioned sound for a VR project by Jeremy Rotsztain.

Real-time sound score in Max.

"House of Shadow Silence" is a audio/visual VR experience that transports audience to the Film Guild Cinema. Frederick Kiesler, a De-Stijl artist, designed for the iconic cinema so that "the spectator must be able to lose himself in an imaginary endless space." One of its groundbreaking innovations was a "screen-o-scope"–a device for projecting onto the walls and ceiling so that viewers would be immersed in the drama on screen. When the cinema opened in New York City in 1929, its multimedia elements were left out due to budgetary constraints.

In "House of Shadow Silence," viewers float through the cinema–through the mechanical eye at the front, and into the filmic grain of the projected films. Looking around, they see geometric forms animating from the walls and ceiling. 1920s silent film compositions are sampled, granulated, and layered in real time, shifting in space with the viewer's gaze.

A project by Second Story.

Generative audio programming and sound design in Max.

To mark the opening of a milestone real estate project in Charlotte, Second Story created “Unify”, an atmospheric, generative artwork.

The sound component consists of six multichannel granular synthesis engines with parameters driven by data extracted from the generative graphics. The sound is designed with public occupancy in mind, avoiding repetition in favor of constantly evolving texture.

Commissioned by the biannual wats:ON Festival at Carnegie Mellon University, in collaboration with Jake Marsico of Ultra Low Res Studio.

BODY DRIFT is an immersive audiovisual performance that uses video-driven animation and multi-channel sound to examine the subtle shifts that take place in the development and degradation of sensory perception.

The sound score was developed in parallel with Jakob Marsico's visual treatment, using Ableton Live's Connection Kit and custom OSC messaging to communicate timeline and audio event information between Ableton and TouchDesigner in the final performance. The full score can be heard here:

A project by Second Story.

Touch interactives, responsive environment engine, and LED control software in openFrameworks and Processing.

In the fall of 2014, the Denver Botanic Gardens opened its Science Pyramid, a space dedicated to highlighting the institution’s scientific research and conservation efforts. Second Story created the permanent exhibit Learning to See, sharing with visitors stories of plants, ecosystems, and science through digital and physical interactives, large-scale graphics, and a lighting environment that responds to the weather at the Gardens.

Learning to See finds inspiration in the geography, landscapes, and plants of Colorado. The exhibit is designed to evoke the feeling of an aspen glade, and contrast of scale is used to mimic the state’s vast elevation differences. Three interactive tables, boulder-like in form, provide grounding information throughout the visitor’s journey and are surrounded by tree-like pylons that each host individual interactive experiences. Activated surfaces throughout the space, as well as the interactive software itself, respond to the temperature and wind speed in the Gardens through color and animation, with technology serving as a bridge between the Pyramid’s interior and the natural world just outside.

A project by Second Story.

Technical Director, Software Development, and Sound / Composition.

Nestled between commercial and residential spaces in downtown Portland, Lawrence Halprin’s Open Space Sequence is regarded as one of the most important pieces of late-20th-century public art in the US. Our goal was to stay true to Halprin’s original intent of creating a civic space choreographed for movement and performance while highlighting the late Modernist aesthetic of the fountain. Using the fountain as the armature, we created a luminescent string sculpture that extended the radiating geometry of the fountain and created a stage for interaction. A console at the foot of the fountain invited visitors to highlight the natural beauty of Halprin’s architecture with focused beams of light, resulting in a continuously evolving interplay of color, shadow, and structure. A sparse, textural soundscape accompanied the interaction and shifted in and out of focus in response to the changing light environment.

This recording documents a live performance with two instances of Borderlands Granular at the 2013 Ars Electronica Festival in Linz, Austria. Borderlands was honored with an Award of Distinction in Digital Musics and Sound Art. In addition to the live performance, the app was exhibited for several weeks in the annual Cyberarts Exhibition in Linz.

A project by Second Story.

Software development in openFrameworks

How do you create a single space that serves two distinct groups of museumgoers: those hungry for more insight and those looking to take a break? For SFMOMA’s grand reopening, we designed a new space that seamlessly blends cafe and art gallery, digital and physical.

Interactive installations let visitors explore how photography shapes our perceptions of the world. Visitors engage using custom analog controls inspired by 35mm film camera dials. From the materials and finishes, to the centralized seating and quiet integration of media, the gallery lets visitors flow through, socialize, dive deep into the museum collection, and relax and sip their lattes with equal ease.

A project by Second Story.

Software lead, kiosk development, generative visualization in openFrameworks

What if integrating genetic medicine into your regular primary care regimen could make you healthier and lower your healthcare costs? This is the vision behind Sanford Imagenetics. We built an immersive installation to get patients onboard with this revolutionary change.

In a patient thoroughfare at the heart of the Sanford Health campus, we used a hanging light sculpture, two floor displays and responsive technology to turn a regular doctor’s appointment into a disarming first encounter with genetic medicine.

The floor displays issue playful invitations to engage, eliciting voice and facial inputs from passersby. This biometric data triggers an on-screen visualization—inspired by the unique individual patterns of DNA—which is then reflected in the twinkling animations of the light sculpture overhead. By using the entire environment as our storytelling canvas, we sparked curiosity about genetics and introduced patients to a realm of wondrous possibility for health care.

A project by Second Story.

Master wall scheduler, client applications, software architecture. Music for project video.

Global Crossroads is a dynamic, large-scale environmental installation driven by web technologies and content created within an accompanying web app.

The centerpiece of the new informal learning space at Occidental College’s historic Johnson Hall, recently reimagined by Belzberg Architects, Global Crossroads is a two-story wall of sculpted glass with reactive LED lighting and ten embedded displays that showcase the depth and dimension of student work being developed and explored. Student research, dispatches from around the globe, and other curated content generated using the web app enliven the space, providing an insight into the breadth of experience that shapes a liberal arts education.

This interactive sound and light sculpture is inspired by the behavior of synchronous fireflies, which interact through a process called pulse-coupled oscillation. A network of tuned electronic chirping and blinking entities is suspended from a large sculpture. These creatures initially pulse at random rates, out of phase with each other. When a single creature "fires," its neighbors slightly adjust their cycles to try to match phase with the firing oscillator. Eventually the entire group converges, only to be disturbed by the sudden presence of an onlooker.
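The firing-and-adjusting behavior described above can be sketched as a small simulation. This is a simplified model written for illustration, not the code running on the sculpture: it assumes every oscillator hears every other one (rather than only its neighbors), that all oscillators run at the same rate, and that a pulse nudges a listener's phase forward in proportion to its current phase. The names `step`, `dt`, and `coupling` are hypothetical.

```python
import random

def step(phases, dt, coupling):
    """Advance all oscillators by dt and resolve any firings.

    Each phase lives in [0, 1). An oscillator fires when its phase reaches 1,
    resets to 0, and pushes every oscillator that has not yet fired slightly
    forward. A push can trigger further firings within the same step.
    Returns the new phases and the set of indices that fired.
    """
    phases = [p + dt for p in phases]
    fired = set()
    pending = [i for i, p in enumerate(phases) if p >= 1.0]
    while pending:
        i = pending.pop()
        if i in fired:
            continue
        fired.add(i)
        phases[i] = 0.0
        for j in range(len(phases)):
            if j not in fired:
                # Nudge the listener toward the firing oscillator's phase.
                phases[j] *= 1.0 + coupling
                if phases[j] >= 1.0:
                    pending.append(j)
    return phases, fired

# Eight oscillators start at random phases, out of step with each other.
rng = random.Random(1)
phases = [rng.random() for _ in range(8)]
groups_before = len(set(phases))

for _ in range(5000):
    phases, fired = step(phases, dt=0.01, coupling=0.05)

groups_after = len(set(phases))
print(groups_before, "distinct phases at start,", groups_after, "at end")
```

In this model, oscillators that fire together reset to exactly the same phase and receive identical nudges afterward, so the number of distinct phases can only shrink over time. An onlooker's disturbance could be imitated by re-randomizing a few phases between steps.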

PCO was exhibited at SOMArts in San Francisco for the 2011 edition of CCRMA Modulations.

Composition and software for 16 performers with hemispherical speaker arrays. Each participant is outfitted with a GameTrack 6-axis continuous controller with mappings to control a custom 6-channel granular synthesizer (Max/MSP). Performers continuously affect the volume, pitch, grain size, and playhead position. One individual from the group is the conductor, who uses a footswitch to select the current audio file that is sampled by every performer.

The performance documented in this video took place in the construction site of the Bing Concert Hall at Stanford University. The ensemble is the 2012 Stanford Laptop Orchestra (SLOrk).

"Morning Frost" featured in the 2015 Academy Award-Nominated

Short Documentary, White Earth, by J. Christian Jensen.

A collection of recordings composed and recorded in 2011/2012. Compiled and released in 2014. Viola on “Morning Frost” performed by Hunter McCurry.

A project by Second Story.

Multitouch app and multiple resolution LED environment. Music for project video.

"On December 2, 2014, over 200 media, technology, and design professionals were invited to the LED LAB in TriBeCa where they became conductors of their space, a beautiful atrium of uniquely shaped LED screens. With the goal of educating guests about LED technology in a fun and dynamic way, the experience began with an interactive table. Our team was inspired by fireflies, specifically their similarity to LED technology in how they illuminate. Guests ‘caught’ uniquely colored and shaped animated fireflies and could release them to eight different LED screens. Through interacting with the fireflies on the table, guests learned about LED resolution and pixel pitch. On the surrounding LED screens, we created ambient, animated landscapes that enveloped the room. Guests could send their fireflies into the environment and experience their pixel pitch lessons in real-time."

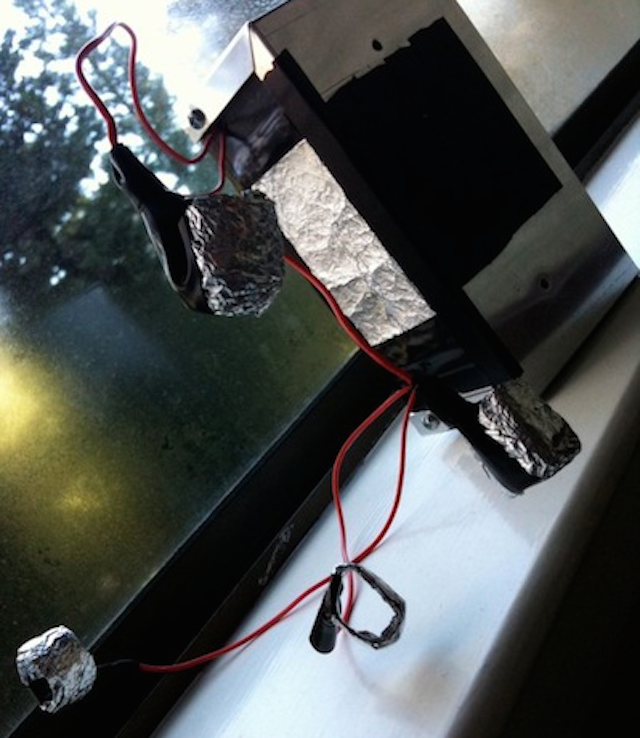

The Feedbox is a prototype handheld feedback machine. Performers strap their fingers into each ring and touch the box to create feedback paths between microphones around the enclosure and a battery powered speaker contained inside the box. While the connections between the speaker and the primary contact surface on the outside of the box could have been made internally, an input and output jack were added to reinforce the fact that this instrument is designed for feedback. Users must plug the instrument into itself before performing.